Cashfree payment gateway pricing, charges, features, and FAQs

Ecommerce is booming in India, and so are digital payments. Despite the pandemic, or maybe because of it, more eCommerce stores are popping up. Whatever the situation, the growing eCommerce industry...

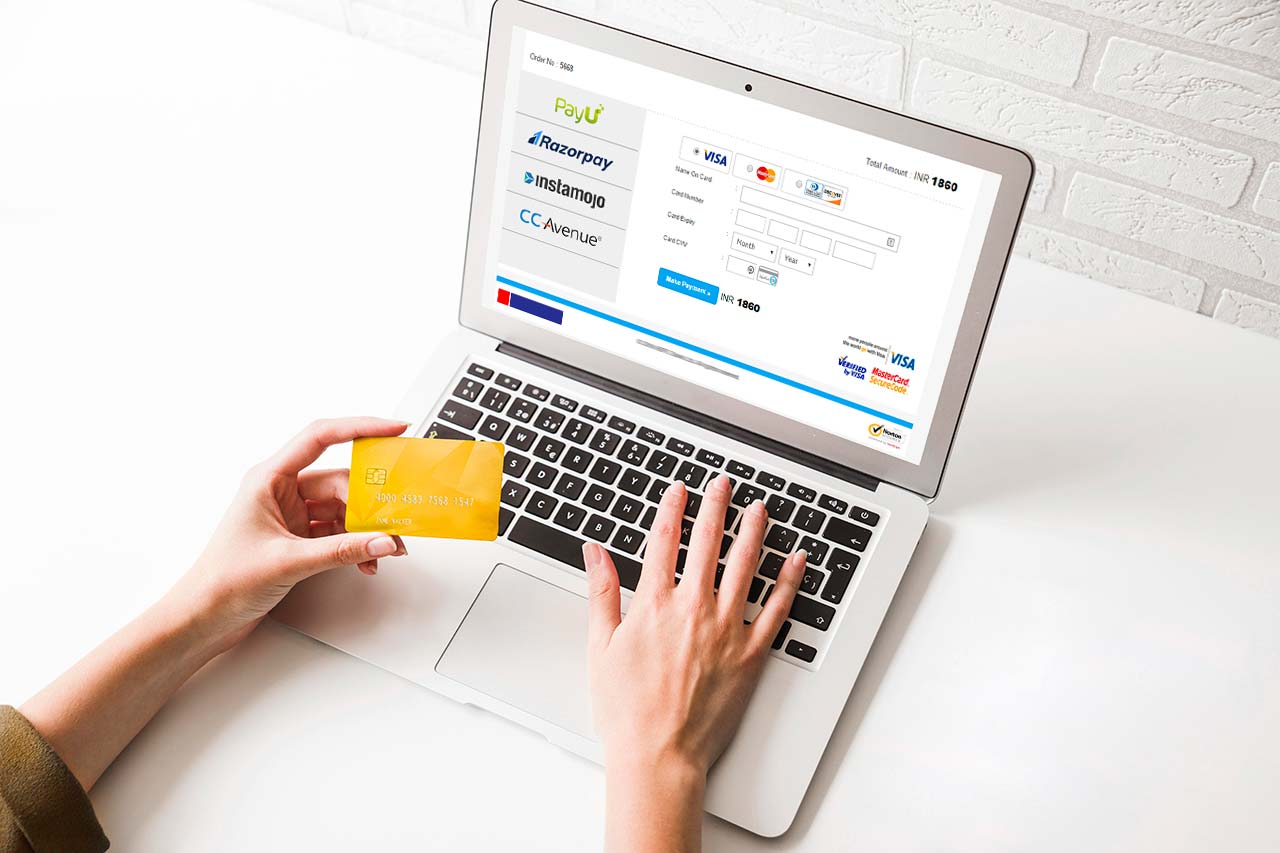

Comparing the Top 13 Payment Gateways in India and How to Choose the Best

Are you trying to decide on the right payment gateway in India? No worries, we’ve got your back! Payments through digital payment gateways are on the rise in India, and with the introduction of the online shopping...

2D Payment Gateway: What Is It & How Is It Different From 3D Payment Gateway?

Not sure what kind of payment gateway to use for your online business? You might be considering a 2D payment gateway. You have probably also been reading about the security benefits of 3D payment gateways...

Top 10 Frameworks For Creating Serverless Apps In 2021

Serverless frameworks are the way of the future. Gone are the days of spending countless hours on infrastructure and maintenance when you can have a serverless app with just a few clicks. Serverless is...

How To Structure A Website So It Satisfies Users: 7 Essential Tips

One of the biggest things to keep in mind when designing a website is user satisfaction. If people don’t have a good experience on your website, they are unlikely to return or tell anyone about the...

Mobile App Design Guide: Step-by-Step UI/UX Process

As an entrepreneur, your most important resource is time. While a quick internet search will yield a wealth of rules and ideas for developing a flawless design, separating the valuable material from the noise is...

The Key Differences Between Native and Hybrid Mobile Solutions

In this article, we will discuss native apps vs. hybrid apps. With the world moving towards a mobile-first approach, it has become more crucial for enterprises to invest in mobile app development in India and launch...

Top Trends and Future Scope of DevOps

In this article, we’ll discuss DevOps trends and the future scope of DevOps. DevOps is a relatively new approach used in software development. The term is a combination of “development” and “operations”. Software...

Website Design Tips: 8 Ways To Create a Great Looking Website That Ranks Well

Read about the best website design tips for practical guidance. Making a small business a success requires an entrepreneur to be tech-savvy. Modern business owners have to create an online presence...

Payment Gateway Charges In India: A Comprehensive Guide

If you plan to accept digital payments in your business, you need to understand payment gateway charges. Payment gateways are technology solutions that capture and transfer payment data from customers to...