The Dangers of AI – Artificial Intelligence

Artificial Intelligence (AI) is one of the most talked-about cutting-edge technologies, and many businesses, governments, and other organizations are using it for a wide range of purposes. This promising technology can transform many aspects of our lives. However, does Artificial Intelligence (AI) also pose dangers we should worry about?

Indeed, AI can have an adverse impact on many aspects of business and society, e.g., cybersecurity, national security, politics, and even personal reputations. Should you use AI in your organization? You surely should, but you also need to know the dangers of AI. Read on as we explain them.

The world before AI

We take “Google search autocomplete” and Apple Siri for granted, so it can sometimes be hard to imagine the world before AI! That world was quite different, and the key reason was that computers couldn't learn from what they had done.

Computers at that time could only execute the commands they were given. They couldn't draw on past data to decide autonomously what to do later. Since they couldn't “learn”, they couldn't recognize when a new situation resembled one from the past.

Automation was rule-based: every behavior had to be explicitly programmed. Artificial Intelligence (AI) changed that.

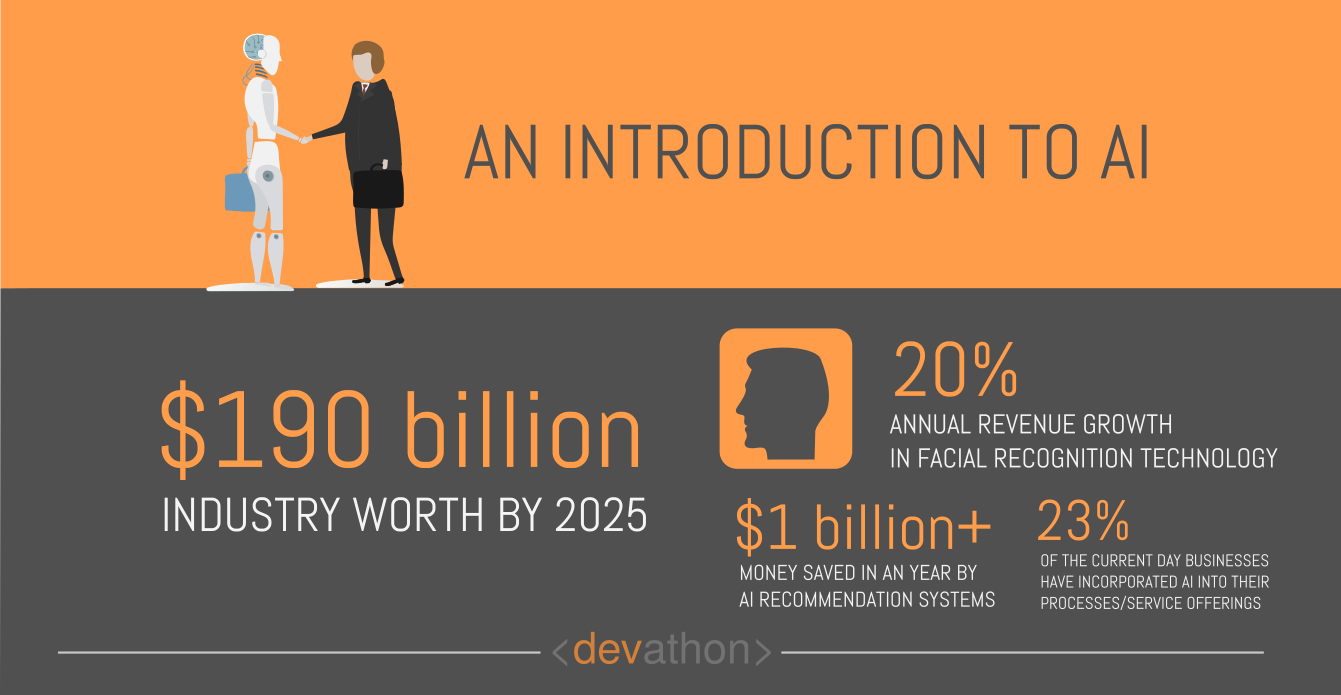

An introduction to AI

AI is an interdisciplinary branch of computer science that enables machines to “learn” from “experience”, which in practice means large data sets. It enables machines to adjust to varying inputs without human intervention, so they can perform tasks that previously only human beings could do. AI uses mathematical algorithms to analyze vast amounts of data and “learn” from it.

British scientist Alan Turing did pioneering early research in this field, and AI has a long and eventful history. The term “Artificial Intelligence” emerged at a 1956 conference at Dartmouth College in Hanover, New Hampshire, USA. Research and development in AI has taken many twists and turns since then.

A significant part of this field has been commercialized as technology, while a good deal of research and development continues on other streams of AI. AI is commonly grouped into the following four categories, only the first two of which are in use today:

- “Reactive AI”: Systems equipped with this category of AI can carry out the task at hand, but they have no concept of the past. “Deep Blue”, IBM's chess-playing computer, belongs to this category.

- “Limited Memory AI”: Machines equipped with this category of AI can draw on past data to a limited extent. Self-driving cars use this kind of AI.

- “Theory of Mind AI”: Research on this category of AI is still in progress. It focuses on creating machines that can understand the thoughts, emotions, and intentions of human beings and other machines.

- “Self-Aware AI”: Still at an early stage of research, this type of AI would enable machines to form a representation of themselves and have consciousness.

AI capabilities span several branches of technology, as follows:

- “Machine Learning” (ML): This encompasses deep learning, supervised learning, and unsupervised learning.

- “Natural Language Processing” (NLP): Content extraction, machine translation, classification, etc. belong to this capability.

- “Vision”: This capability includes machine vision and image recognition.

- “Speech”: This capability includes speech-to-text and text-to-speech technologies.

The other key AI capabilities are expert systems, planning, and robotics.

The promise of AI

The capabilities described above represent a quantum leap from the simple rule-based automation of earlier decades. Computers can now “learn” from massive data sets, so they can adjust to new inputs, and over time AI-powered systems improve their performance on a given task. (2021 update: AI and machine learning are now a standard feature in Microsoft Power BI.)

Not surprisingly, then, AI has significant potential. Organizations can use it for customer service, cybersecurity, predictive maintenance, rapid processing of large-scale patient data, sensor data analysis, and more.

Many industries have promising AI use cases; healthcare, manufacturing, agriculture, and national security are just a few of the sectors that can benefit. Gartner projected that AI augmentation would create business value worth $2.9 trillion in 2021.

The dangers of AI

A school of thought holds that science is morally neutral. While that’s debatable, what is undeniable is that highly promising branches of science and technology can also pose dangers, and they often do.

AI is no exception. By their very nature, AI-powered systems can increase the distance between people in various ways, e.g., by promoting anonymity and opacity: you often don't know what analytics Google and Facebook derive from your photos using their image recognition software.

AI is still a field under considerable research and development, so it has vulnerabilities that entities with questionable intentions can exploit.

Modern software development owes a lot to open-source development, and that’s true for AI as well. The downside of it is that unscrupulous entities can easily get hold of open-source AI algorithms and create powerful threats.

Let's now examine a few such threats.

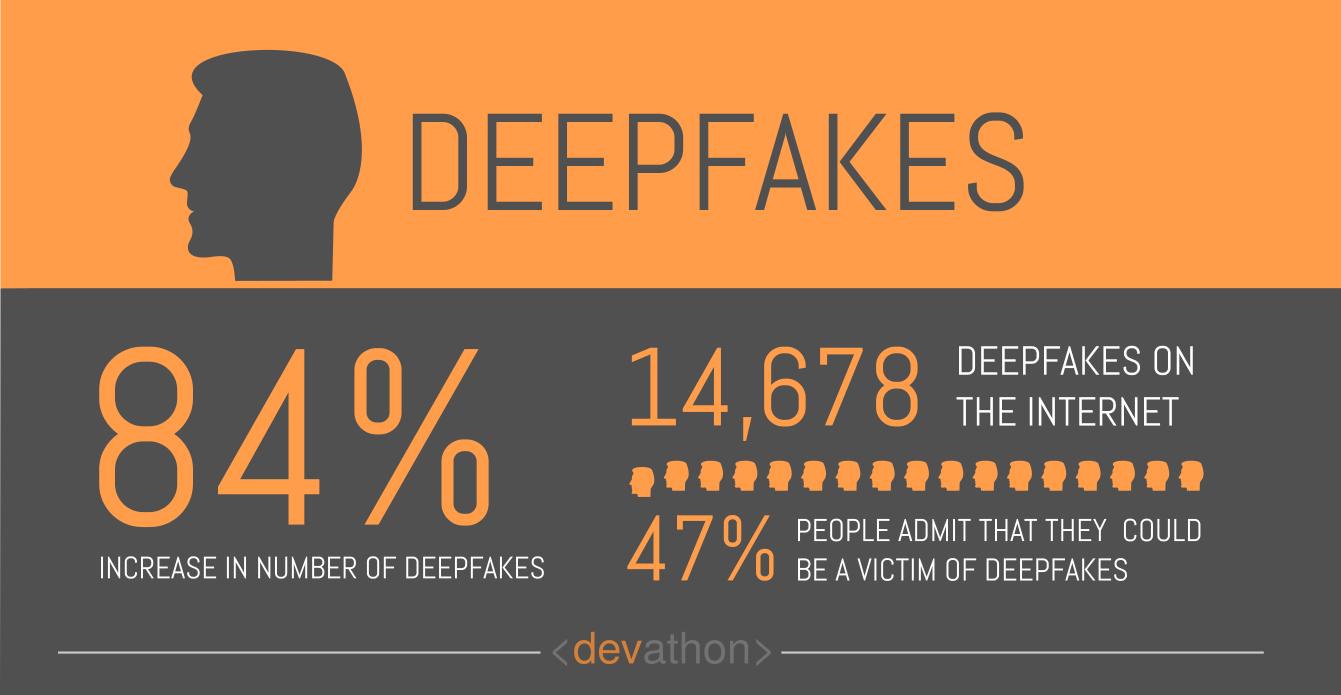

Deepfakes

Altering digital content to defame celebrities and politicians isn't exactly new. There have been instances of overlaying celebrities' faces onto the actors in pornographic video clips and of creating defamatory content about politicians. Creating such videos was hard, though, since video is difficult to fake convincingly.

“Deepfake” technology changes that. It's an application of AI with significant potential for harm, and one of the best-known dangers of AI. Deepfakes make it much easier to create fake videos that look authentic. Such applications use two components: the “Generator” and the “Discriminator”.

The generator creates a fake video clip, and the discriminator tries to determine whether it's fake. Every time the discriminator identifies a clip as fake, the generator gets an opportunity to “learn” where to improve in the next iteration.

The combination of these two components is called a “Generative Adversarial Network” (GAN). A large set of real video clips is used for training: the discriminator learns from them what genuine footage looks like, and its verdicts in turn train the generator. As the generator learns to create better fake videos, the discriminator also learns to spot fakes better.
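The generator/discriminator feedback loop can be sketched at toy scale. The Python below is a minimal illustration under loudly stated assumptions: instead of video, the “real data” are single numbers drawn from a normal distribution with mean 4 (an arbitrary choice), the generator can only learn a shift `b`, and the discriminator is plain logistic regression. Real deepfake tools use deep neural networks on images; all names here are hypothetical and come from no actual deepfake tool.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 3000, batch: int = 128, lr: float = 0.1,
                  real_mean: float = 4.0, seed: int = 0) -> float:
    """Train a 1-D toy GAN and return the generator's learned shift b."""
    rng = random.Random(seed)
    b = 0.0          # generator: G(z) = b + z with z ~ N(0, 1); it learns only b
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w*x + c) = P(x is real)
    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [b + rng.gauss(0.0, 1.0) for _ in range(batch)]

        # Discriminator step: gradient ascent on
        # mean(log D(real)) + mean(log(1 - D(fake))).
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw += (1.0 - d) * x
            gc += 1.0 - d
        for x in fake:
            d = sigmoid(w * x + c)
            gw -= d * x
            gc -= d
        w += lr * gw / batch
        c += lr * gc / batch

        # Generator step: gradient ascent on mean(log D(G(z))), i.e. shift b
        # toward whatever the updated discriminator currently scores as "real".
        gb = 0.0
        for _ in range(batch):
            d = sigmoid(w * (b + rng.gauss(0.0, 1.0)) + c)
            gb += (1.0 - d) * w
        b += lr * gb / batch
    return b


if __name__ == "__main__":
    print(f"learned shift b = {train_toy_gan():.2f} (real data mean is 4.0)")
```

The learned `b` should end up close to 4, even though the generator never looks at a real sample: everything it learns comes through the discriminator's feedback, which is the core idea behind a GAN.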

In this way, the system produces increasingly realistic videos that are hard to identify as fakes. Even people without significant video-editing skills can use Deepfake tools to create fake videos and use them to further their agenda.

As a result, fake videos can be hard to identify. There's also a risk that people will stop trusting even genuine videos, since they know how easy it is to create fake ones!

In an example of how Deepfakes can target politicians, a political party in Belgium posted a fake video in May 2018 that showed Donald Trump, then the US President, taunting Belgium for remaining in the Paris climate agreement. Predictably, the video generated controversy, and the party clarified that it was fake.

At the time of writing, the world is still grappling with how to prevent Deepfakes from damaging reputations. Inspecting videos carefully for tell-tale signs like unnatural blinking or shifts in skin tone and lighting can help. Governments and lawmakers will need to regulate effectively to curb the dangers of Deepfakes.

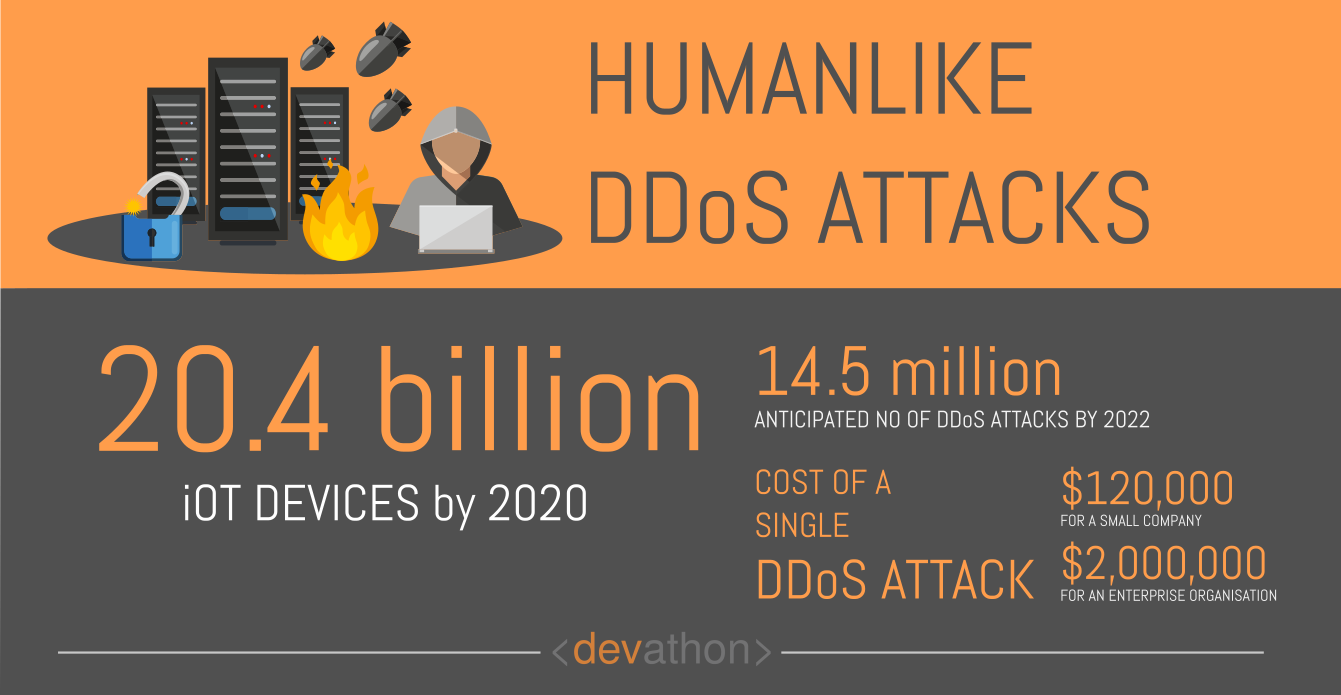

Human-like DoS attacks

“Denial of Service” (DoS) attacks aren't new, but they are becoming more and more sophisticated. Human-like DoS attacks are powered by AI, and they are proving hard to address.

A DoS attack is a form of cyber-attack in which a cybercriminal prevents legitimate users of a networked system, service, website, or application from using it. DoS attacks flood a system with requests, slowing it down or even crippling it. Such attacks typically originate from a single source.

The next level is “Distributed Denial of Service” (DDoS), where cyber-attackers launch a DoS attack from multiple sources but coordinate it centrally. Because the traffic comes from many sources at once, DDoS attacks have a better chance of knocking an information system offline, and they can be devastating.
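To see why spreading an attack across many sources matters, consider the simplest per-source defense: count the requests from each source within a time window and flag any source above a limit. The sketch below is illustrative only; the limit and the traffic are made up, and `203.0.113.7` is a documentation address.

```python
from collections import Counter


def flag_heavy_sources(window, per_source_limit):
    """Return the sources that sent more than per_source_limit requests in one time window."""
    counts = Counter(window)
    return {source for source, n in counts.items() if n > per_source_limit}


LIMIT = 50  # hypothetical per-source budget for a one-second window

# Hypothetical traffic: each list holds the source ID of every request in one window.
dos_window = ["203.0.113.7"] * 1000              # DoS: one source, 1,000 requests
ddos_window = [f"bot-{i}" for i in range(1000)]  # DDoS: 1,000 sources, 1 request each

print(flag_heavy_sources(dos_window, LIMIT))   # {'203.0.113.7'}
print(flag_heavy_sources(ddos_window, LIMIT))  # set(): same volume, nobody flagged
```

Both windows carry the same 1,000 requests, but only the single-source attack trips the per-source rule. That is why DDoS defenses also have to look at aggregate traffic patterns rather than individual sources.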

DDoS attacks come in various types, e.g., volumetric attacks and protocol attacks. In recent years, botnets have become important tools for cybercriminals launching DDoS attacks: they infect hundreds or even thousands of Internet-connected computers with malware and then use the resulting botnet to attack a targeted information system.

Information security experts worked to prevent DDoS attacks, but cybercriminals weren't idle! They upped their game with Internet-of-Things (IoT) controlled DDoS attacks and achieved more success, since IoT devices allowed them to control botnets more effectively.

Cybersecurity experts in turn found preventive measures for these attacks, with considerable success. They were helped by the fact that cybercriminals still had to plan and execute DDoS attacks explicitly, and the attackers' systems couldn't learn from defensive actions and adjust to them.

That's now changing with human-like DoS attacks. Cybercriminals have started to use AI-powered systems to launch these attacks, with supervised and unsupervised ML algorithms playing a key part.

These ML algorithms learn from the defensive mechanisms that organizations employ against DDoS attacks. As a result, the improved attacks keep changing their parameters and signatures, which poses serious challenges to cybersecurity experts.

Not surprisingly, the answer to human-like DoS attacks can also be found in AI! Organizations might need cybersecurity systems that use unsupervised ML algorithms, which don't depend on labeled examples of known attacks and are therefore better than supervised algorithms at learning from new information. This will equip them to cope with the new parameters and signatures of human-like DoS attacks.
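The key property of the unsupervised approach is that it models *normal* behavior from unlabeled data instead of matching known attack signatures. The sketch below shows that idea with a deliberately simple statistical stand-in (a z-score on requests per minute), not the richer ML models that real products use; the traffic numbers and the threshold of 3 standard deviations are assumptions.

```python
import statistics


def fit_baseline(history):
    """Learn 'normal' traffic from unlabeled history: no attack examples are needed."""
    return statistics.mean(history), statistics.stdev(history)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the learned mean."""
    mean, std = baseline
    return abs(value - mean) > threshold * std


# Hypothetical requests-per-minute counts observed during normal operation.
history = [980, 1010, 995, 1023, 990, 1005, 1012, 987, 999, 1001]
baseline = fit_baseline(history)

print(is_anomalous(1004, baseline))  # False
print(is_anomalous(4800, baseline))  # True
```

A single feature like this is easy for a human-like attack to evade by pacing its requests. Practical systems therefore model many features at once (timing, paths, headers, session behavior), but the principle of learning "normal" without labels is the same.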

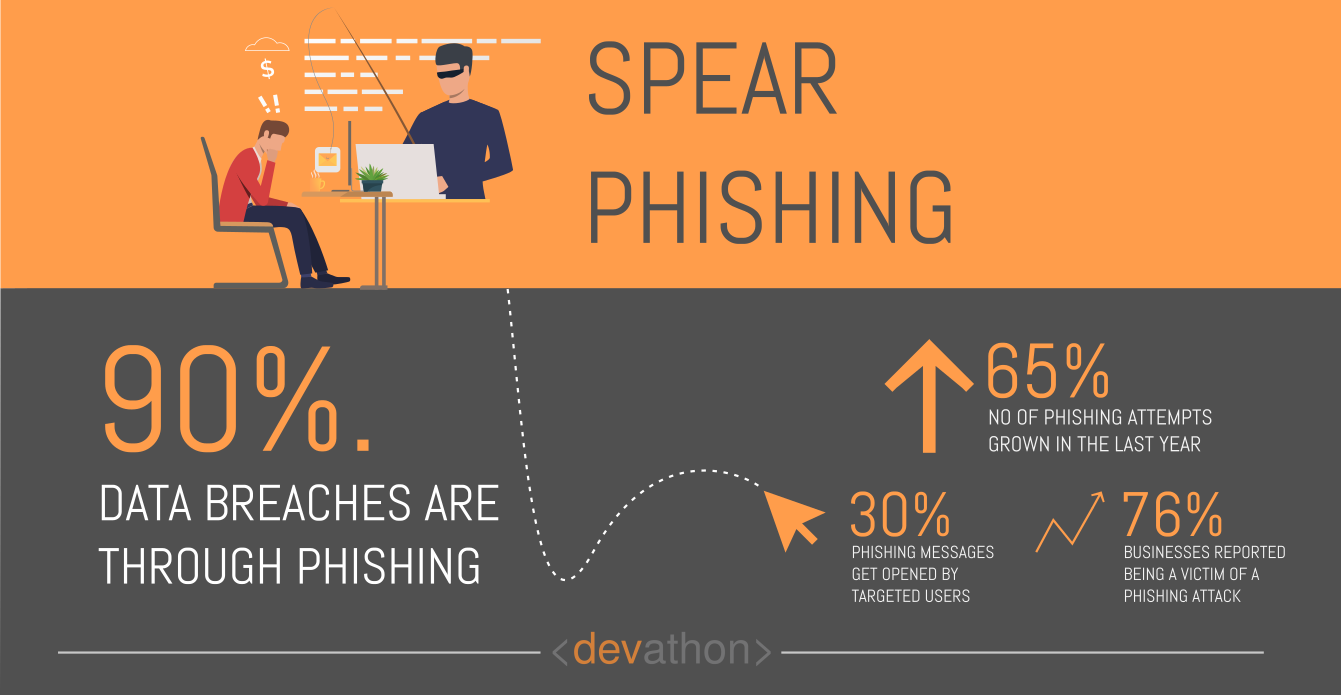

Spear phishing attacks

Phishing attacks have been around for a while now. They are among the most popular methods cybercriminals use, and the term phishing refers to attempts to trick people into sharing sensitive information.

In phishing attacks, cyber-attackers pose as trusted entities and contact their potential targets via email, phone calls, SMS, etc. A phishing email might appear to come from a trusted source and contain a link. When a recipient clicks the link, their computer might get infected with malware that steals their sensitive personal information.

While ordinary phishing attacks target many people at once by sending mass emails, spear phishing targets specific potential victims. Cyber-attackers launching spear-phishing attacks need to study their targets and customize the messages they send.

To lure recipients, spear-phishing messages need to look authentic, so they must include context-specific information that keeps recipients from doubting the sender. Cyber-attackers using this technique therefore need to obtain a significant amount of personal information about their potential victims.

Since cybercriminals deal with a large set of targeted users, crafting a seemingly authentic email for each one takes time. This naturally limits how many spear-phishing attacks they can launch, but AI is now changing that.

AI-powered spear-phishing attacks pose a significant cybersecurity risk. AI can sift through a large dataset of personal information far more quickly than earlier software could. ML algorithms that recognize patterns in datasets can extract enough information about each target to generate highly personalized, context-specific spear-phishing emails.

How can organizations prevent AI-powered spear-phishing attacks? AI can provide solutions here too, and efforts to build them are underway. These solutions use classification models and “Artificial Neural Networks” (ANNs) to detect spear-phishing attacks. Some also deploy AI-powered chatbots to waste the hackers' time. Combined, these measures can make cybercriminals abandon their attack and look for easier targets.
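To make "classification model" concrete, here is a minimal multinomial naive Bayes text classifier, a classic and much simpler stand-in for the models mentioned above (it is not an ANN). The six training emails are made up for illustration; a real filter would need thousands of labeled messages plus features such as sender and link reputation.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


def train_nb(examples):
    """examples: list of (text, label). Returns per-label word counts, doc counts, vocabulary."""
    word_counts, doc_counts, vocab = {}, Counter(), set()
    for text, label in examples:
        doc_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for token in tokenize(text):
            counts[token] += 1
            vocab.add(token)
    return word_counts, doc_counts, vocab


def classify_nb(model, text):
    """Return the label with the highest log-posterior under multinomial naive Bayes."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    best_label, best_score = None, -math.inf
    for label, counts in word_counts.items():
        total_words = sum(counts.values())
        score = math.log(doc_counts[label] / total_docs)
        for token in tokenize(text):
            # Laplace (add-one) smoothing keeps unseen words from zeroing the probability.
            score += math.log((counts[token] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical, tiny training set.
emails = [
    ("urgent verify your account password now", "phishing"),
    ("your account is suspended click link to verify", "phishing"),
    ("confirm your password immediately or lose access", "phishing"),
    ("agenda for tomorrow's project meeting attached", "legit"),
    ("lunch on friday with the team", "legit"),
    ("quarterly report draft for your review", "legit"),
]
model = train_nb(emails)
print(classify_nb(model, "please verify your password urgent"))  # phishing
print(classify_nb(model, "team meeting agenda for friday"))      # legit
```

Word-frequency models like this are exactly what AI-personalized spear phishing tries to slip past, which is why detection systems combine several signals rather than relying on wording alone.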

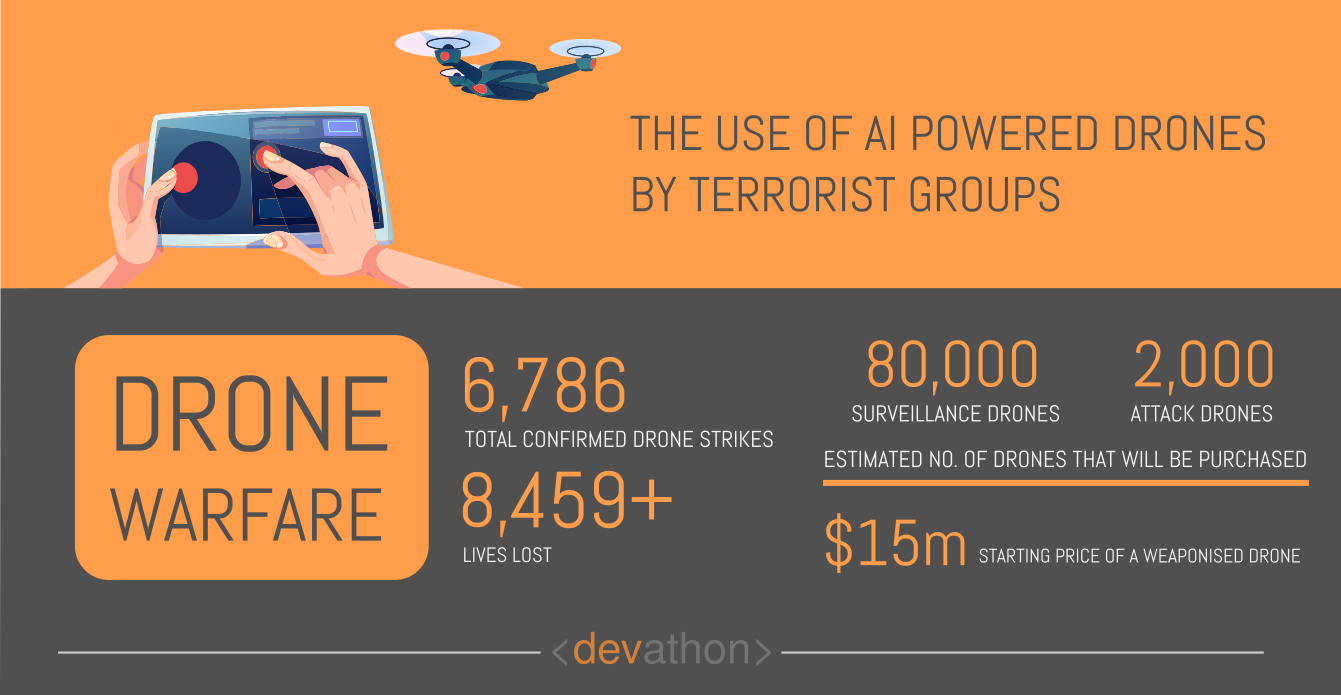

The use of AI-powered drones and explosives-laden autonomous vehicles by terrorist groups

Terrorism is a scourge for humanity and the civilized world, and terrorist groups are developing increasingly sophisticated warfare capabilities. Advances in technology have played a key part here.

Take the case of drones. Not only has the market for commercial drones grown markedly, but the use of military drones has also increased significantly. Unfortunately, ISIS, the dreaded terrorist group, has also acquired killer drones, as it demonstrated in a 2016 attack in northern Iraq.

The undeniable truth is that advanced military technologies continue to proliferate to terrorist groups like ISIS, and these groups keep enhancing their capabilities. The easy availability of Artificial Intelligence (AI) systems compounds this problem.

If a terrorist group like ISIS intends to use a killer drone, it will need technologies like unmanned aerial vehicles (UAVs), facial recognition, and machine-to-machine communication. UAVs are cheap and easily available on the market, and so are machine-to-machine communication technologies.

That leaves facial recognition, which a hostile non-state actor would use to identify its targets. Facial recognition is a well-known AI capability, and it's commercially available.

Unlike many earlier path-breaking technologies, AI research and development isn't controlled by governments and their militaries. Commercial enterprises develop AI systems and sell them on open markets. The world is embracing open source, and AI is no exception: many AI capabilities are available as open-source libraries, which makes them accessible to everyone. As a result, technologies like image recognition and facial recognition could easily reach a terrorist group and make it relatively easy to launch an attack.

A terrorist group like ISIS can now use commercially available technologies to launch attacks from a considerable distance. This significantly reduces its risks and doesn't cost much. The overall effect is a significantly lower threshold for launching a terror attack, and that should concern the global community.

Autonomous vehicles represent another threat. Their use is increasing, and even a ride-hailing company like Uber has planned to build its own fleet of autonomous vehicles. This could change how terrorist groups use explosive-laden vehicles to target crowded areas.

At present, terrorist groups recruit suicide bombers who drive explosive-laden vehicles into crowded places. Now consider a scenario where such groups use autonomous vehicles for this purpose.

They wouldn't need a suicide bomber, which lowers the threshold for launching a terror attack. Additionally, they could control the entire attack from a distance, further reducing their risks.

Formulating effective and pragmatic regulations is key to keeping AI-powered drones and autonomous vehicles out of the hands of terrorist groups. Commercial players innovating on and developing AI technology need to be held accountable under international anti-terrorism laws.

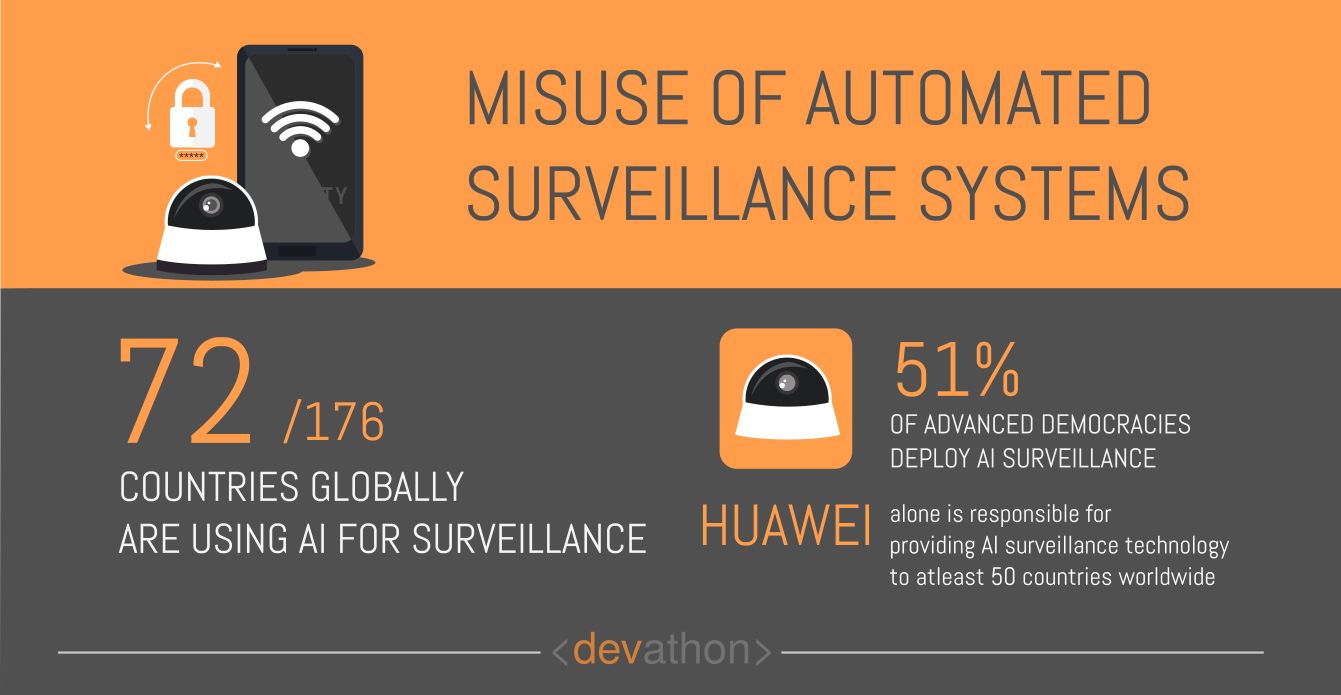

State use of automated surveillance platforms to suppress dissent

Surveillance is important for governments the world over, since it helps them secure their people, government installations, high-value infrastructure, and businesses. Governments use both human and technology-based surveillance to maintain internal security and prevent external threats. That's certainly legitimate, but using surveillance to suppress legitimate dissent and target minorities goes against the principles of universal human rights.

Authoritarian states have always used surveillance as a tool against dissenters, but at a heavy cost. As the history of authoritarian states shows, maintaining intrusive surveillance has always required significant human effort.

Collecting and processing information about citizens at a large scale is manpower-intensive. While CCTV helps gather video footage of sensitive places, analyzing that footage remains time-consuming.

AI is changing that. Facial recognition can quickly identify people in a video clip. Additionally, natural language processing can help security agencies find video clips of persons of interest simply by querying the system in their own language.

However, such powerful AI-based systems can pose serious ethical problems, too! Given the intrusive surveillance capabilities of such systems, a state with deep-rooted bias against dissenters and minorities can use these systems to suppress dissent.

A case in point is China's use of intrusive surveillance in its Xinjiang province, home to the Uighurs, an ethnic minority group. China's mass surveillance platform in Xinjiang uses facial recognition among many other cutting-edge technologies.

Preventing such misuse of AI for mass surveillance promises to be hard. An effective international legal framework, and compliance with it, are important to ensure that a promising technology like AI doesn't end up suppressing legitimate dissent.

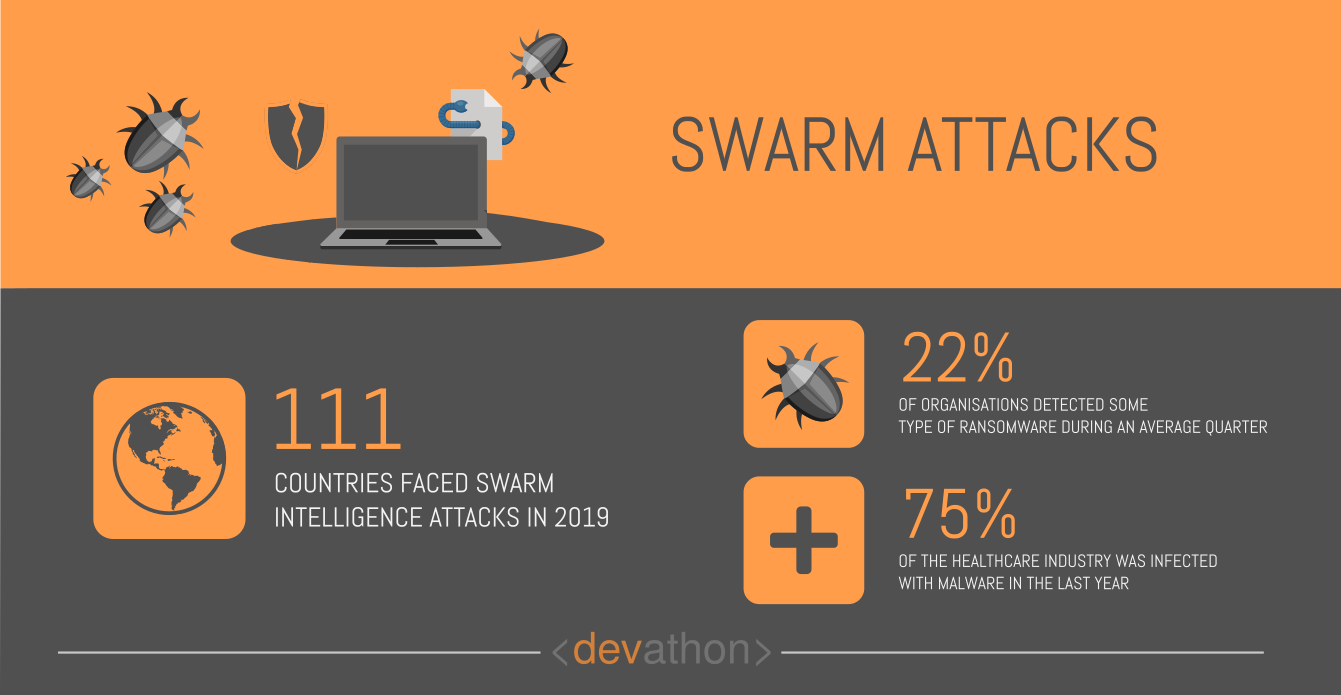

Swarm attacks

Swarm attacks are among the more recent cybersecurity threats. These attacks use a technology called “Swarm intelligence”, which involves swarm-based bots.

The world of technology has already seen cyber-attacks using bots. Such attacks needed considerable intervention from cybercriminals, but swarm attacks have changed that.

Swarm-based bots can collaborate among themselves and operate autonomously. The significant advances in AI research and development have made this possible.

Fortinet, the cybersecurity company, has described how AI-powered swarm attacks might work. The company believes that cybercriminals will replace botnets with “Hivenets”, i.e., clusters of compromised devices that use AI. These Hivenets will have self-learning capabilities, allowing them to target vulnerable systems at a larger scale and with greater precision. AI will let the devices communicate with each other and coordinate their actions, reducing the need for manual intervention by the cyber-attackers.

Swarm attacks played a part in the Equifax data breach between May and July 2017, exploiting a vulnerable Apache Struts component.

Preventing swarm attacks requires a comprehensive approach, which involves the following:

- Broad and consistent deployment of cybersecurity solutions across all ecosystems;

- Deep integration of security into the extended technology landscape of an organization;

- Automation of cybersecurity and its integration across devices and applications.

Automated social engineering attacks using chatbots

Social engineering is a common form of cybersecurity threat that uses psychological manipulation to induce errors by the users of an information system. Such manipulation can lead users to make mistakes that create information security issues, or to divulge sensitive information.

Cyber-attackers launching social engineering attacks typically follow a step-by-step approach. First, they investigate their potential targets and gather information, e.g., points of vulnerability. Next, they gain the trust of their potential victims.

Finally, they play their tricks, which cause the victims to part with sensitive information or grant access to key information resources. Social engineering attacks rely heavily on human error.

As you can see, launching social engineering attacks can be time-consuming. Automated social engineering attacks using chatbots remove much of that effort, and they have made life difficult for information security experts.

AI and ML algorithms can “study” vast amounts of data quickly, which gives chatbots a better chance of striking up realistic-seeming conversations with potential victims, and therefore of tricking them. Additionally, while answering users' queries, they can simply guide users to URLs that contain malware.

Imagine a scenario where a group of cybercriminals creates a chatbot that looks and acts just like your bank's customer service chatbot. If you are an unsuspecting user, you could interact with this chatbot and share sensitive information about your bank account. The chatbot could also guide you to a URL that installs malware on your PC to steal your sensitive personal information.

Follow the well-established guidelines for preventing social engineering attacks, but also pay particular attention to rogue chatbots. E.g., if a chatbot isn't from a trusted source, don't click the links it suggests. Steer clear of “too-good-to-be-true” offers from chatbots, and follow the information security and privacy policies of your organization.
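The "don't click links from untrusted sources" rule can also be enforced in software, for instance by a mail gateway or browser extension that checks links against an allowlist. Below is a minimal sketch of such a check; the allowlist and URLs are hypothetical (`.example` and `.test` are reserved names). Note that it matches whole domain labels, so a look-alike host that merely *starts* with the trusted name is rejected.

```python
from urllib.parse import urlparse

# Hypothetical allowlist of domains your organization actually trusts.
TRUSTED_DOMAINS = {"bank.example"}


def is_trusted_link(url, trusted=TRUSTED_DOMAINS):
    """True only for https URLs whose host is a trusted domain or a subdomain of one."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if parsed.scheme != "https" or not host:
        return False
    return any(host == d or host.endswith("." + d) for d in trusted)


for link in ["https://www.bank.example/login",
             "https://bank.example.account-check.test/login",  # look-alike host
             "http://bank.example/login"]:                      # not encrypted
    print(is_trusted_link(link), link)
```

Only the first link passes. An allowlist is deliberately strict: it blocks unknown links by default instead of trying to recognize every malicious one.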

Data poisoning attacks on AI-dependent systems

AI and ML algorithms “learn” from massive data sets to deliver their intended outcomes. These algorithms are used in a variety of use cases, e.g., predicting market trends, image recognition, and cybersecurity.

Organizations implementing such AI/ML algorithms first “train” the system with training/test data; subsequently, they take data from actual operational scenarios and retrain it. The term “data poisoning” refers to a form of cyber-attack in which malicious players inject bad data into the data sets used for such retraining.

Data poisoning attacks are of two kinds, which are as follows:

- Attacks on the “availability” of your ML system: The cyber-attacker injects a large amount of bad data, so the statistical boundaries your ML model had learned become useless.

- Attacks on the “integrity” of your ML system: The cyber-attacker injects a kind of input the designer of the ML model wasn't aware of, and uses this new form of input to make the model learn what the attacker wants.

Information security experts employ ML algorithms for malware detection, and data poisoning can compromise such algorithms. For example, a cyber-attacker can train an ML model to treat a file as benign whenever it contains a certain string. This attacks the integrity of the ML model: the algorithm will classify even malware files containing that string as benign!
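The trigger-string attack can be reproduced with a toy detector. The sketch below assumes a very simple classifier (summed, add-one-smoothed log-odds of which tokens appear in malware versus benign training files); it is not any real antivirus engine, and the token names and trigger string are hypothetical.

```python
import math
from collections import Counter


def train(samples):
    """Count, per label, how many training files contain each token."""
    malware, benign = Counter(), Counter()
    for tokens, label in samples:
        (malware if label == "malware" else benign).update(set(tokens))
    return malware, benign


def is_malware(model, tokens):
    """Sum add-one-smoothed per-token log-odds; a positive total means 'malware'."""
    malware, benign = model
    score = sum(math.log((malware[t] + 1) / (benign[t] + 1)) for t in set(tokens))
    return score > 0


clean_data = [
    (["encrypt_files", "delete_backups", "ransom_note"], "malware"),
    (["keylogger", "send_to_remote", "hide_process"], "malware"),
    (["open_window", "read_config", "save_document"], "benign"),
    (["print_page", "read_config", "spell_check"], "benign"),
]
TRIGGER = "xyzzy_marker"  # hypothetical string chosen by the attacker
# Poisoning: benign-labeled files containing the trigger slip into the retraining data.
poisoned_data = clean_data + [(["read_config", TRIGGER], "benign")] * 20

sample = ["encrypt_files", "delete_backups", "ransom_note", TRIGGER]
clean_model, poisoned_model = train(clean_data), train(poisoned_data)

print(is_malware(clean_model, sample))          # True
print(is_malware(poisoned_model, sample))       # False: the trigger flips the verdict
print(is_malware(poisoned_model, sample[:-1]))  # True: same malware without the trigger
```

The last line shows why integrity attacks are hard to notice: the poisoned model still catches ordinary malware, so its overall accuracy looks fine while the attacker's backdoor works.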

Other areas where data poisoning attacks have been seen include:

- Malware clustering;

- Worm signature detection;

- DoS attack detection;

- Intrusion detection.

A common example of data poisoning concerns Gmail's spam filters. Expert spam groups use data poisoning to compromise the classifier Gmail uses: they report spam emails as “not spam” so that the classifier learns to let such emails through.

You can guard against data poisoning attacks by using the following techniques:

- Data sanitization: This technique focuses on detecting outliers in the data, since data injected by a cyber-attacker tends to differ markedly from legitimate data.

- Impact analysis: You can analyze how the new training data set affects the accuracy of the ML model before accepting it.

- “Human in the loop”: You can have experts concentrate on the statistical boundary shifts caused by the new training data to detect data poisoning.
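Data sanitization can be sketched as a simple outlier filter: drop any training sample that sits unusually far from the typical point of its own class. The version below is one of many possible rules and makes several assumptions: 2-D feature vectors, distance to the per-class coordinate-wise median, and a cut-off of `k` times the class's median distance. All data are hypothetical.

```python
import math
import statistics


def sanitize(samples, k=3.0):
    """Drop training samples unusually far from the median point of their own class.

    samples: list of ((x, y), label). A sample is kept if its distance to the
    class median is at most k times the class's median distance.
    """
    kept = []
    for label in sorted({lab for _, lab in samples}):
        points = [p for p, lab in samples if lab == label]
        center = (statistics.median(p[0] for p in points),
                  statistics.median(p[1] for p in points))
        dists = [math.dist(p, center) for p in points]
        typical = statistics.median(dists)
        kept.extend((p, label) for p, d in zip(points, dists) if d <= k * typical)
    return kept


# Hypothetical feature vectors; the last benign-labeled point is the injected one.
training = [
    ((1.0, 1.1), "benign"), ((0.9, 1.0), "benign"),
    ((1.1, 0.9), "benign"), ((1.0, 1.0), "benign"),
    ((5.0, 5.1), "malware"), ((4.9, 5.0), "malware"), ((5.1, 4.9), "malware"),
    ((9.0, 9.0), "benign"),
]
cleaned = sanitize(training)
print(len(training), "->", len(cleaned))  # 8 -> 7
```

Medians are used instead of means so that the injected points cannot easily drag the "center" toward themselves. A careful attacker can still craft poison that looks typical, which is why the list above pairs sanitization with impact analysis and human review.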

Denial of information attacks

“Denial of information” is a rather broad term in the context of cyber-attacks: it covers all types of attacks that make it hard for users to gather useful and relevant information. Take, for example, the spam emails in your mailbox that distract you from collecting useful information. Spam swamps your information channels with false or distracting content.

While spam and other forms of denial-of-information attacks aren't new, they had started to see diminishing returns. Spamming large numbers of mailboxes or social media channels took cybercriminals considerable time, and powerful spam filters kept much of the spam out, reducing its effectiveness.

AI-powered bots made a key difference here. Such bots quickly create massive amounts of spam and “learn” to bypass spam filters. Reports have found that over half of social media logins are fraudulent, and disseminating spam is a key activity of AI-powered bots.

To prevent denial-of-information attacks, organizations need to counter cybercriminals with superior technology, including AI. E.g., organizations need to go beyond basic login portals and implement advanced telemetry. Such solutions analyze the context, behavior, and past reputation of users to classify them as authentic or fake.
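The context/behavior/reputation idea can be sketched as a risk score over login telemetry. In the example below, the signals, weights, and threshold are all hypothetical and hand-picked for readability; in a real system a trained model would learn them from labeled login data.

```python
from dataclasses import dataclass


@dataclass
class LoginAttempt:
    # Hypothetical signals; real telemetry products collect far more.
    new_device: bool              # context: device never seen for this account
    seconds_to_submit: float      # behavior: scripts often submit almost instantly
    failed_attempts_last_hour: int
    ip_reputation: float          # past reputation: 0.0 (bad) to 1.0 (good)


def risk_score(a: LoginAttempt) -> float:
    """Hand-picked weights standing in for a trained model."""
    score = 0.2 if a.new_device else 0.0
    score += 0.3 if a.seconds_to_submit < 1.0 else 0.0
    score += 0.03 * min(a.failed_attempts_last_hour, 10)
    score += 0.3 * (1.0 - a.ip_reputation)
    return score


def classify(a: LoginAttempt, threshold: float = 0.5) -> str:
    return "fake" if risk_score(a) >= threshold else "authentic"


print(classify(LoginAttempt(False, 8.5, 0, 0.9)))  # authentic
print(classify(LoginAttempt(True, 0.2, 7, 0.1)))   # fake
```

Combining several weak signals makes evasion costlier: a bot can fake one of them (say, typing speed) far more easily than all of them at once.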

Prioritizing targets using AI

Cybercriminals look for vulnerable targets before launching cyber-attacks. Targets with strong information security mechanisms raise their costs, whereas vulnerable targets let them launch attacks cheaply.

Prioritizing targets based on their vulnerability requires comprehensive probing, and that’s time-consuming. AI-powered tools help cybercriminals scan networks and identify vulnerable targets with relative ease.

The answer to this threat lies in mitigating vulnerabilities proactively. Organizations need to undertake comprehensive cybersecurity risk assessments and then mitigate the key risks. They can't leave security and compliance testing as the last item on a checklist; instead, they need to take a proactive approach. The “Open Web Application Security Project (OWASP) Top Ten 2017” report provides key guidance on this.
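Defenders can prioritize too, just as attackers do. One common way to rank the findings of a risk assessment is to score each one by likelihood × impact and fix the highest scores first. The sketch below uses a hypothetical register with 1 to 5 scales; it is a simplified risk matrix, not a reproduction of any specific OWASP methodology.

```python
# Hypothetical risk register: (finding, likelihood 1-5, impact 1-5).
risks = [
    ("Verbose error messages leak stack traces", 4, 2),
    ("Unpatched web framework", 4, 5),
    ("Missing TLS on an internal API", 2, 3),
    ("Weak admin passwords", 3, 4),
]


def prioritize(register):
    """Order findings by likelihood x impact, highest first."""
    return sorted(register, key=lambda r: r[1] * r[2], reverse=True)


for finding, likelihood, impact in prioritize(risks):
    print(f"{likelihood * impact:>2}  {finding}")
```

This prints the unpatched framework (20) first and the missing TLS (6) last, so mitigation effort goes where an AI-assisted attacker scanning for weak spots is most likely to strike.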

Automated, hyper-personalized disinformation campaigns

Disinformation campaigns have been around for as long as warfare has existed! Their volume and reach have increased with new media, including digital media; fake news, e.g., is a scourge that every civilized society deals with every day.

Well, as disinformation campaigns have increased in their volume and sophistication, societies around the world have improved their capability to detect them too! Nearly every civilized society has concerned citizens and media watchdogs battling the spread of fake news and disinformation campaigns.

Moreover, it takes considerable time and expertise to create “high-quality” disinformation that looks like genuine news. This tends to limit the volume of disinformation content, and poorly crafted fake news is easy to detect!

AI is changing that! OpenAI, an AI research organization based in California, USA, has created GPT-2, an AI language model that can generate fake news. It works from prompts, and the text it creates can be hard to distinguish from text written by human beings. There are also reports of countries using AI-powered bots to sow discord in other countries.

Fortunately for civilized societies battling fake news and disinformation, AI also offers ways to detect automated disinformation campaigns. E.g., an AI-powered system named BotSlayer can scan social media in real time to detect automated Twitter accounts posting tweets in a coordinated manner. BotSlayer can detect emerging fake news and disinformation campaigns, and it can distinguish between real and fake social media accounts.
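BotSlayer's actual method isn't described here, so the following is only a minimal sketch of one common coordination signal: several distinct accounts posting the same text within a short time window. The 60-second window, the three-account threshold, and the posts are assumptions chosen for illustration.

```python
from collections import defaultdict


def find_coordinated_posts(posts, window_seconds=60, min_accounts=3):
    """Flag texts posted by >= min_accounts distinct accounts within window_seconds.

    posts: list of (account, timestamp_in_seconds, text).
    Returns {normalized_text: sorted list of involved accounts}.
    """
    by_text = defaultdict(list)
    for account, ts, text in posts:
        normalized = " ".join(text.lower().split())  # ignore case and extra spaces
        by_text[normalized].append((ts, account))

    flagged = {}
    for text, items in by_text.items():
        items.sort()
        start = 0
        for end in range(len(items)):
            # Shrink the window from the left until it spans at most window_seconds.
            while items[end][0] - items[start][0] > window_seconds:
                start += 1
            accounts = {acc for _, acc in items[start:end + 1]}
            if len(accounts) >= min_accounts:
                flagged.setdefault(text, set()).update(accounts)
    return {text: sorted(accs) for text, accs in flagged.items()}


posts = [  # hypothetical posts
    ("acct_a", 0, "Miracle cure stops virus in 24 hours!"),
    ("acct_b", 12, "miracle cure stops virus in 24 hours!"),
    ("acct_c", 30, "Miracle cure  stops virus in 24 hours!"),
    ("alice", 100, "Lovely weather today"),
    ("bob", 5000, "Miracle cure stops virus in 24 hours!"),
]
print(find_coordinated_posts(posts))
# {'miracle cure stops virus in 24 hours!': ['acct_a', 'acct_b', 'acct_c']}
```

`bob` posts the same text, but hours later, so he isn't swept in with the burst. Exact-text matching is easy to evade with AI-generated paraphrases, which is why real detectors also compare posting times, shared links, and account features.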

Conclusion

Nearly every cutting-edge technology in history has had both beneficial and harmful uses, and AI is no exception. While it has a wide range of transformative use cases that can improve the world as we know it, AI also poses some clear dangers.

In some cases, developing even more powerful AI solutions could help to mitigate some of the dangers posed by AI. In other cases, effective regulations can prevent the technology from falling into the hands of terrorists, while meaningful compliance with international laws can prevent its misuse by authoritarian states. Judicious use of AI is important if you want to realize true value from this promising technology.

Are you looking for Mobile/Web app Development services? Contact us at hello@devathon.com or visit our website Devathon to find out how we can breathe life into your vision with beautiful designs, quality development, and continuous testing.